We have read many replies and discussions about the memory system of the Xbox One (Durango) across the internet, so we would like to share this information with all of you. In this article we describe the different types of memory that Durango has and how they work together with the rest of the system.

The central elements of the Durango memory system are the north bridge and the GPU memory system. The memory system supports multiple clients (for example, the CPU and the GPU), coherent and non-coherent memory access, and two types of memory (DRAM and ESRAM).

Memory clients

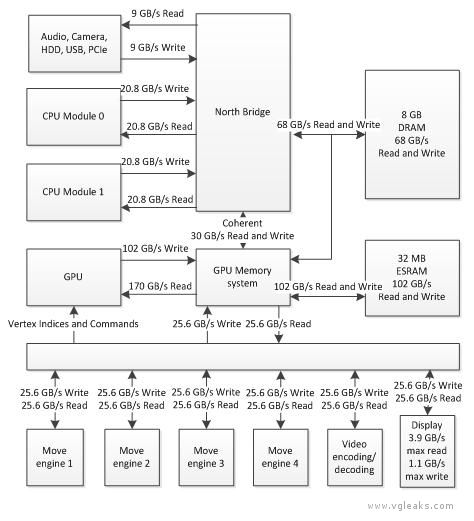

This diagram shows the Durango memory clients with the maximum available bandwidth on each path.

Memory

As you can see on the right side of the diagram, the Durango console has:

- 8 GB of DRAM.

- 32 MB of ESRAM.

DRAM

The maximum combined read and write bandwidth to DRAM is 68 GB/s (gigabytes per second). In other words, the sum of read and write bandwidth to DRAM cannot exceed 68 GB/s. You can realistically expect that about 80 – 85% of that bandwidth will be achievable (54.4 GB/s – 57.8 GB/s).
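The arithmetic behind that range can be sketched in a few lines. This is only an illustration: the function names `achievable_range` and `write_budget` are made up for this example, and the 40 GB/s read figure in the usage line is a hypothetical workload, not a measured one.

```python
DRAM_PEAK_GBPS = 68.0  # combined read + write peak quoted in the article


def achievable_range(peak_gbps, low=0.80, high=0.85):
    """Return the (low, high) realistic bandwidth in GB/s for a given peak."""
    return round(peak_gbps * low, 1), round(peak_gbps * high, 1)


def write_budget(read_gbps, total_gbps):
    """Write bandwidth left once reads are accounted for (never negative).

    Reads and writes draw on the same combined budget, so whatever the
    reads consume is no longer available to writes.
    """
    return round(max(0.0, total_gbps - read_gbps), 1)


if __name__ == "__main__":
    low, high = achievable_range(DRAM_PEAK_GBPS)
    print("realistic DRAM bandwidth:", low, "to", high, "GB/s")
    # Hypothetical workload: 40 GB/s of reads against the low estimate.
    print("left for writes:", write_budget(40.0, low), "GB/s")
```

Because the limit is combined, a read-heavy frame leaves correspondingly less room for writes, and the reverse is also true.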

DRAM bandwidth is shared between the following components:

- CPU

- GPU

- Display scan out

- Move engines

- Audio system

ESRAM

The maximum combined ESRAM read and write bandwidth is 102 GB/s. Its high bandwidth and low latency make ESRAM a valuable memory resource for the GPU.

ESRAM bandwidth is shared between the following components:

- GPU

- Move engines

- Video encode/decode engine

System coherency

There are two types of coherency in the Durango memory system:

- Fully hardware coherent

- I/O coherent

The two CPU modules are fully coherent. The term fully coherent means that the CPUs do not need to explicitly flush in order for the latest copy of modified data to be available (except when using Write Combined access).

The rest of the Durango infrastructure (the GPU and I/O devices such as Audio and the Kinect Sensor) is I/O coherent. The term I/O coherent means that those clients can access data in the CPU caches, but that their own caches cannot be probed.

When the CPU produces data, other system clients can choose to consume that data without any extra synchronization work from the CPU.

The total coherent bandwidth through the north bridge is limited to about 30 GB/s.

The CPU requests do not probe any other non-CPU clients, even if the clients have caches. (For example, the GPU has its own cache hierarchy, but the GPU is not probed by the CPU requests.) Therefore, I/O coherent clients must explicitly flush modified data for any latest-modified copy to become visible to the CPUs and to the other I/O coherent clients.
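The coherency rules above boil down to a small decision table, sketched here. The `Client` enum, the function `action_before_sharing` and the returned strings are all hypothetical names chosen for illustration; they do not correspond to any real Durango API.

```python
from enum import Enum


class Client(Enum):
    """Memory clients named in the article (illustrative subset)."""
    CPU = "cpu"
    GPU = "gpu"
    AUDIO = "audio"
    KINECT = "kinect"


def action_before_sharing(producer, write_combined=False):
    """Return what the producer must do before others can see its writes.

    - CPU, normal memory: nothing, the CPU modules are fully coherent.
    - CPU, Write Combined memory: a store fence (SFENCE) is required.
    - Any I/O coherent client (GPU, audio, Kinect): an explicit flush,
      because its caches are never probed by other clients.
    """
    if producer is Client.CPU:
        return "sfence" if write_combined else "none"
    return "flush"


if __name__ == "__main__":
    print(action_before_sharing(Client.CPU))
    print(action_before_sharing(Client.CPU, write_combined=True))
    print(action_before_sharing(Client.GPU))
```

The asymmetry is the point: data produced by the CPU is visible for free, while data produced by any other client costs an explicit flush.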

The GPU can perform both coherent and non-coherent memory access. Coherent read-bandwidth of the GPU is limited to 30 GB/s when there is a cache miss, and it’s limited to 10 – 15 GB/s when there is a hit. A GPU memory page attribute determines the coherency of memory access.

The CPU

The Durango console has two CPU modules, and each module has its own 2 MB L2 cache. Each module has four cores, and each of the four cores in each module also has its own 32 KB L1 cache.

When a local L2 miss occurs, the Durango console probes the adjacent L2 cache via the north bridge. Since there is no fast path between the two L2 caches, to avoid cache thrashing, it’s important that you maximize the sharing of data between cores in a module, and that you minimize the sharing between the two CPU modules.

Typical latencies for local and remote cache hits are shown in this table.

| Cache access | Typical latency |
| --- | --- |
| Local L1 hit | 3 cycles for 64-bit values, 5 cycles for 128-bit values |
| Local L2 hit | approximately 30 cycles |
| Remote L2 hit | approximately 100 cycles |
| Remote L1 hit | approximately 120 cycles |
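To get a feel for why sharing across modules is costly, here is a rough sketch that averages the cache latencies quoted in the article over a mix of accesses. The hit fractions are invented for illustration, the model assumes every access hits in some cache (DRAM latency is not given in the article), and it uses the 64-bit L1 figure.

```python
# Cycle counts quoted in the article (L1 figure is for 64-bit values).
LATENCY_CYCLES = {
    "local_l1": 3,
    "local_l2": 30,
    "remote_l2": 100,
    "remote_l1": 120,
}


def average_latency(hit_fractions):
    """Weighted mean latency in cycles; the fractions must sum to 1."""
    total = sum(hit_fractions.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("hit fractions must sum to 1")
    return sum(LATENCY_CYCLES[level] * share
               for level, share in hit_fractions.items())


if __name__ == "__main__":
    # Hypothetical mixes: data kept inside one module versus data that
    # is sometimes found in the other module's L2.
    module_local = {"local_l1": 0.90, "local_l2": 0.10}
    cross_module = {"local_l1": 0.90, "local_l2": 0.05, "remote_l2": 0.05}
    print("module-local:", round(average_latency(module_local), 2), "cycles")
    print("cross-module:", round(average_latency(cross_module), 2), "cycles")
```

Even a small share of remote hits moves the average noticeably, which is the motivation for keeping shared data within a single module.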

Each of the two CPU modules connects to the north bridge by a bus that can carry up to 20.8 GB/s in each direction.

From a program standpoint, normal x86 ordering applies to both reads and writes. Stores are strongly ordered (becoming visible in program order with no explicit memory barriers), and reads are out of order.

Keep in mind that if the CPU uses Write Combined memory writes, then a memory synchronization instruction (SFENCE) must follow to ensure that the writes are visible to the other client devices.

The GPU

The GPU can read at 170 GB/s and write at 102 GB/s through multiple combinations of its clients. Examples of GPU clients are the Color/Depth Blocks and the GPU L2 cache.

The GPU has a direct non-coherent connection to the DRAM memory controller and to ESRAM. The GPU also has a coherent read/write path to the CPU’s L2 caches and to DRAM.

For each read and write request from the GPU, the request uses one path depending on whether the accessed resource is located in “coherent” or “non-coherent” memory.

Some GPU functions share a lower-bandwidth (25.6 GB/s), bidirectional read/write path. Those GPU functions include:

- Command buffer and vertex index fetch

- Move engines

- Video encoding/decoding engines

- Front buffer scan out

As the GPU is I/O coherent, data in the GPU caches must be flushed before that data is visible to other components of the system.

The available bandwidth and requirements of other memory clients limit the total read and write bandwidth of the GPU.

This table shows an example of the maximum memory-bandwidths that the GPU can attain with different types of memory transfers.

| Source memory | Destination memory | Maximum read bandwidth (GB/s) | Maximum write bandwidth (GB/s) | Maximum total bandwidth (GB/s) |
| --- | --- | --- | --- | --- |
| ESRAM | ESRAM | 51.2 | 51.2 | 102.4 |
| ESRAM | DRAM | 68.2* | 68.2 | 136.4 |
| DRAM | ESRAM | 68.2 | 68.2* | 136.4 |
| DRAM | DRAM | 34.1 | 34.1 | 68.2 |

The asterisk (*) marks the ESRAM side of a mixed transfer: although ESRAM has 102.4 GB/s of bandwidth available, the DRAM bandwidth limits the speed of the transfer.

ESRAM-to-DRAM and DRAM-to-ESRAM scenarios are symmetrical.
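The figures in the table are consistent with a very simple model, sketched below. Treat it as one possible reading rather than a description of the hardware: it assumes each memory has a single combined read-plus-write peak, that a copy reads and writes at the same rate, and that a copy within one memory splits that memory's peak evenly. The names `PEAK_GBPS` and `copy_bandwidth` are made up for this example.

```python
# Combined read + write peaks in GB/s, as used in the transfer table.
PEAK_GBPS = {"ESRAM": 102.4, "DRAM": 68.2}


def copy_bandwidth(src, dst):
    """Return (read, write, total) bandwidth in GB/s for a src -> dst copy.

    Same memory: reads and writes share one peak, so each gets half.
    Different memories: the slower memory caps both sides of the copy.
    """
    if src == dst:
        rate = PEAK_GBPS[src] / 2
    else:
        rate = min(PEAK_GBPS[src], PEAK_GBPS[dst])
    return rate, rate, 2 * rate


if __name__ == "__main__":
    for src in ("ESRAM", "DRAM"):
        for dst in ("ESRAM", "DRAM"):
            read, write, total = copy_bandwidth(src, dst)
            print(src, "->", dst, round(read, 1), round(write, 1), round(total, 1))
```

Under these assumptions the mixed cases come out identical in both directions, which matches the symmetry noted above.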

Move engines

The Durango console has 25.6 GB/s of read and 25.6 GB/s of write bandwidth shared between:

- Four move engines

- Display scan out and write-back

- Video encoding and decoding

The display scan out consumes a maximum of 3.9 GB/s of read bandwidth (3 display planes × 4 bytes per pixel × the HDMI limit of 300 megapixels per second), and display write-back consumes a maximum of 1.1 GB/s of write bandwidth (30 bits per pixel × 300 megapixels per second).
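The two products can be worked through as follows, assuming 1 GB means 10^9 bytes; the function names are hypothetical. Note that the plain product for scan out comes to 3.6 GB/s, a little under the quoted 3.9 GB/s, and the article does not say what accounts for the difference. The write-back product, 1.125 GB/s, agrees with the quoted 1.1 GB/s.

```python
HDMI_PIXEL_RATE = 300e6  # pixels per second, the HDMI limit quoted above


def scan_out_read_gbps(planes=3, bytes_per_pixel=4, pixel_rate=HDMI_PIXEL_RATE):
    """Read bandwidth for display scan out, in GB/s (1 GB = 1e9 bytes)."""
    return planes * bytes_per_pixel * pixel_rate / 1e9


def write_back_gbps(bits_per_pixel=30, pixel_rate=HDMI_PIXEL_RATE):
    """Write bandwidth for display write-back, in GB/s (1 GB = 1e9 bytes)."""
    return bits_per_pixel / 8 * pixel_rate / 1e9


if __name__ == "__main__":
    print("scan out read:", scan_out_read_gbps(), "GB/s")
    print("write-back:", write_back_gbps(), "GB/s")
```

Either way, display traffic takes only a modest slice of the shared 25.6 GB/s in each direction, leaving most of it for the move engines and video encoding and decoding.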

You may wonder what happens when the GPU is busy copying data and a move engine is told to copy data from one type of memory to another. In this situation, the memory system of the GPU shares bandwidth fairly between source and destination clients. The maximum bandwidth can be calculated by using the peak-bandwidth diagram at the start of this article.

If you want to see how all of this works, just read the example we’ve written for all of you.
